Experimental design is a structured approach used to conduct scientific experiments. It enables researchers to explore cause-and-effect relationships by controlling variables and testing hypotheses.

This guide explores the types of experimental designs, common methods, and best practices for planning and conducting experiments.

Experimental Design

Experimental design refers to the process of planning a study to test a hypothesis, where variables are manipulated to observe their effects on outcomes. By carefully controlling conditions, researchers can determine whether specific factors cause changes in a dependent variable.

Key Characteristics of Experimental Design:

- Manipulation of Variables: The researcher intentionally changes one or more independent variables.

- Control of Extraneous Factors: Other variables are kept constant to avoid interference.

- Randomization: Subjects are often randomly assigned to groups to reduce bias.

- Replication: Repeating the experiment or having multiple subjects helps verify results.

Purpose of Experimental Design

The primary purpose of experimental design is to establish causal relationships by controlling for extraneous factors and reducing bias. Experimental designs help:

- Test Hypotheses: Determine if there is a significant effect of independent variables on dependent variables.

- Control Confounding Variables: Minimize the impact of variables that could distort results.

- Generate Reproducible Results: Provide a structured approach that allows other researchers to replicate findings.

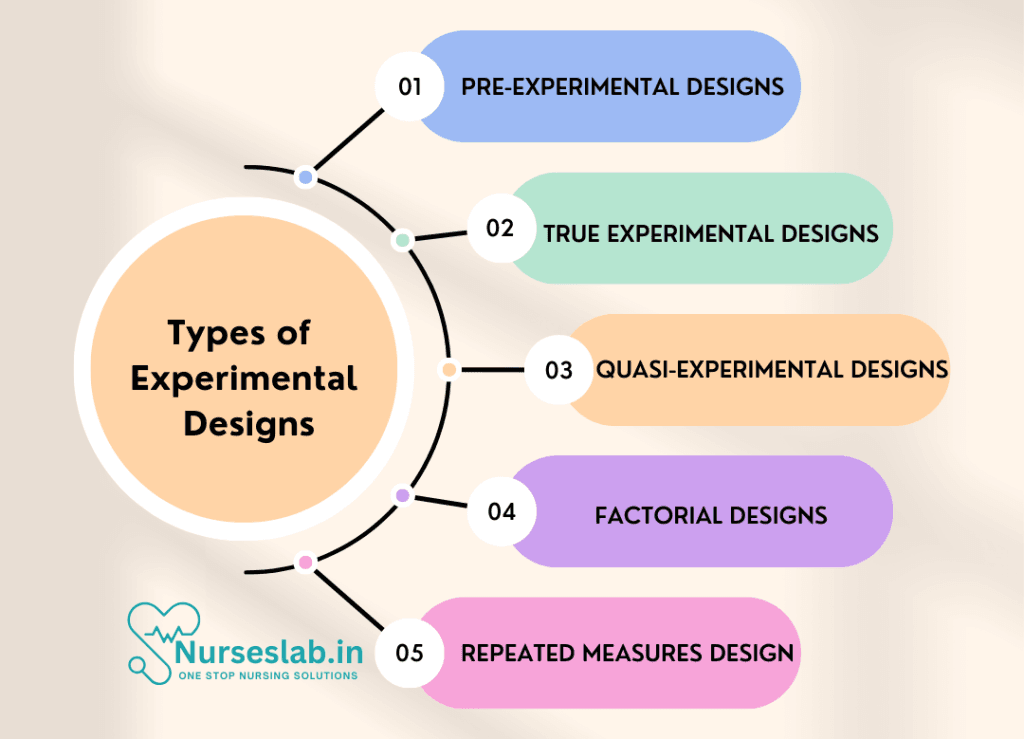

Types of Experimental Designs

Experimental designs can vary based on the number of variables, the assignment of participants, and the purpose of the experiment. Here are some common types:

1. Pre-Experimental Designs

These designs are exploratory and lack random assignment (and often a control group); they are typically used when strict control is not feasible. They provide initial insights but are less rigorous in establishing causality.

- One-Shot Case Study: Observes the effect of an intervention on a single group without comparison to a control.
  - Example: A training program is provided, and participants’ knowledge is tested afterward, without a pretest.

- One-Group Pretest-Posttest Design: Measures a group before and after an intervention.
  - Example: A group is tested on reading skills, receives instruction, and is tested again to measure improvement.

2. True Experimental Designs

True experiments involve random assignment of participants to control or experimental groups, providing high levels of control over variables.

- Randomized Controlled Trials (RCTs): Participants are randomly assigned to either the experimental or control group, and the effects of the intervention are measured.
  - Example: A new drug’s efficacy is tested with patients randomly assigned to receive the drug or a placebo.

- Posttest-Only Control Group Design: Participants are randomly assigned to an experimental or a control group, and the outcome is measured only after the intervention, with no pretest.
  - Example: Two randomly assigned groups are compared after one group receives a treatment and the other receives no intervention.

3. Quasi-Experimental Designs

Quasi-experiments lack random assignment but still aim to determine causality by comparing groups or time periods. They are often used when randomization isn’t possible, such as in natural or field experiments.

- Nonequivalent Control Group Design: Compares two groups whose participants are not randomly assigned, using pretests and posttests.
  - Example: Schools adopt different curricula, and students’ test scores are compared before and after implementation.

- Interrupted Time Series Design: Observes a single group repeatedly over time, before and after an intervention.
  - Example: Traffic accident rates are recorded for a city before and after a new speed limit is enforced.
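One common way to analyze the nonequivalent control group design above is a difference-in-differences comparison: the change in the treated group minus the change in the comparison group. The sketch below uses hypothetical mean test scores for two schools (the class and function names are illustrative); a full analysis would also model individual students and check that the groups were following similar trends before the change.

```python
from dataclasses import dataclass


@dataclass
class GroupMeans:
    """Mean outcome for one group, measured before (pretest) and after (posttest)."""
    pre: float
    post: float

    def change(self) -> float:
        return self.post - self.pre


def difference_in_differences(treated: GroupMeans, comparison: GroupMeans) -> float:
    """Change in the treated group minus change in the comparison group.

    Subtracting the comparison group's change removes any shift both groups
    would have shown anyway (e.g. normal learning over a school year),
    assuming the two groups would otherwise have changed by the same amount.
    """
    return treated.change() - comparison.change()


# Hypothetical mean test scores (not real data).
new_curriculum = GroupMeans(pre=62.0, post=74.0)
old_curriculum = GroupMeans(pre=65.0, post=70.0)

effect = difference_in_differences(new_curriculum, old_curriculum)
print(f"Estimated curriculum effect: {effect:+.1f} points")
```

A posttest-only comparison (74 vs. 70) would suggest 4 points, and the treated school's raw gain suggests 12; the difference-in-differences estimate of 7 accounts for both the baseline gap and the change shared by both schools.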

4. Factorial Designs

Factorial designs test the effects of multiple independent variables simultaneously. This design is useful for studying the interactions between variables.

- Two-Way Factorial Design: Examines two independent variables and their interaction.
  - Example: Studying how caffeine (variable 1) and sleep deprivation (variable 2) affect memory performance.

- Three-Way Factorial Design: Involves three independent variables, allowing for more complex interactions.
  - Example: Studying how age, gender, and education level, and their interactions, relate to technology usage. Because these are participant characteristics that cannot be manipulated or randomly assigned, this is a quasi-experimental factorial design.
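To show what "main effects" and "interaction" mean in the caffeine and sleep deprivation example above, here is a small worked sketch for a 2 x 2 design. The factor levels and cell means are hypothetical; the code enumerates the four conditions and computes effects as simple differences of means.

```python
from itertools import product
from statistics import mean

# Levels of each (hypothetical) factor in a 2 x 2 factorial design.
caffeine_levels = ["none", "caffeine"]
sleep_levels = ["rested", "deprived"]

# Every combination of levels is one experimental condition (4 cells).
conditions = list(product(caffeine_levels, sleep_levels))

# Hypothetical mean memory scores for each cell (not real data).
cell_means = {
    ("none", "rested"): 80.0,
    ("none", "deprived"): 60.0,
    ("caffeine", "rested"): 82.0,
    ("caffeine", "deprived"): 74.0,
}


def main_effect(factor_index: int, low: str, high: str) -> float:
    """Average of the cells at the high level minus average of the cells at the low level."""
    high_mean = mean(m for cell, m in cell_means.items() if cell[factor_index] == high)
    low_mean = mean(m for cell, m in cell_means.items() if cell[factor_index] == low)
    return high_mean - low_mean


def interaction() -> float:
    """Caffeine effect when sleep-deprived minus caffeine effect when rested."""
    effect_when_deprived = cell_means[("caffeine", "deprived")] - cell_means[("none", "deprived")]
    effect_when_rested = cell_means[("caffeine", "rested")] - cell_means[("none", "rested")]
    return effect_when_deprived - effect_when_rested


print("Conditions:", conditions)
print("Main effect of caffeine:", main_effect(0, "none", "caffeine"))
print("Main effect of sleep deprivation:", main_effect(1, "rested", "deprived"))
print("Interaction (caffeine x sleep):", interaction())
```

A nonzero interaction means the effect of one factor depends on the level of the other: in these made-up numbers, caffeine helps far more when participants are sleep-deprived. Whether such differences are statistically reliable would be tested with a factorial ANOVA, which is not shown here.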

5. Repeated Measures Design

In repeated measures designs, the same participants are exposed to different conditions or treatments. This design is valuable for studying changes within subjects over time.

- Within-Subject Design: The same participants experience all conditions, reducing variability due to individual differences.
  - Example: Measuring participants’ reaction time before, during, and after caffeine consumption.

- Crossover Design: Participants are randomly assigned to different treatment orders, which helps control for order effects.
  - Example: Testing two medications, with each participant receiving both: half receive medication A first and the other half receive medication B first.
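Because each participant in a within-subject design is measured under every condition, the data are often analyzed on each participant's difference between conditions. A minimal sketch of the paired (dependent-samples) t statistic using only the standard library; the reaction times are hypothetical, and converting t into a p-value would require a t-distribution function (for example from SciPy), which is not shown.

```python
import math
from statistics import mean, stdev


def paired_t(condition_a: list[float], condition_b: list[float]) -> tuple[float, int]:
    """Paired t statistic for a within-subject comparison.

    Position i must hold the same participant's score in both conditions,
    so each participant serves as their own control.
    Returns (t statistic, degrees of freedom).
    """
    if len(condition_a) != len(condition_b) or len(condition_a) < 2:
        raise ValueError("need two equal-length lists with at least two participants")
    diffs = [b - a for a, b in zip(condition_a, condition_b)]
    # stdev is 0 (and the division fails) if every difference is identical
    standard_error = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


# Hypothetical reaction times (ms) for the same five participants.
before_caffeine = [320.0, 305.0, 340.0, 298.0, 315.0]
after_caffeine = [300.0, 296.0, 322.0, 290.0, 301.0]

t, df = paired_t(before_caffeine, after_caffeine)
print(f"t({df}) = {t:.2f}")
```

Working on difference scores removes stable individual differences (some people are simply faster than others), which is why within-subject designs need fewer participants.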

Methods for Implementing Experimental Designs

1. Randomization

Purpose: Ensures each participant has an equal chance of being assigned to any group, reducing selection bias.

Method: Use random number generators or assignment software to allocate participants randomly.
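A minimal sketch of simple random assignment with Python's standard `random` module. The participant IDs, group names, and seed are hypothetical; dedicated trial software may add features such as allocation concealment and stratification.

```python
import random
from typing import Optional


def randomly_assign(participants: list[str], groups: list[str], seed: Optional[int] = None) -> dict[str, str]:
    """Simple random assignment with (near-)equal group sizes.

    The participant list is shuffled and then dealt out to the groups in turn.
    When the number of participants is a multiple of the number of groups,
    every participant has the same chance of landing in each group.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible for auditing
    shuffled = list(participants)  # copy so the caller's list keeps its order
    rng.shuffle(shuffled)
    return {person: groups[i % len(groups)] for i, person in enumerate(shuffled)}


participants = [f"P{n:02d}" for n in range(1, 11)]  # hypothetical IDs P01..P10
allocation = randomly_assign(participants, ["treatment", "control"], seed=2024)
for person in participants:
    print(person, allocation[person])
```

Shuffling and then dealing, rather than flipping a coin for each participant, keeps the groups the same size; small trials often go further and use block or stratified randomization.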

2. Blinding

Purpose: Prevents participants or researchers from knowing which group (experimental or control) participants belong to, reducing bias.

Method: Implement single-blind (participants unaware) or double-blind (both participants and researchers unaware) procedures.
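Blinding is mainly an operational procedure, but its core idea can be sketched: the people running the study see only neutral codes, while the mapping from code to group is kept separately. The scheme below (function name, 8-character kit codes, and group labels) is a hypothetical illustration using Python's `secrets` module; in a real drug trial the drug and placebo must also look identical.

```python
import secrets


def blind_allocation(allocation: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Hide group labels behind random kit codes (hypothetical scheme).

    Returns:
      kit_for_participant: participant -> kit code, given to the study team
      unblinding_key:      kit code -> group, held by someone not involved
                           in treating or assessing participants
    """
    kit_for_participant: dict[str, str] = {}
    unblinding_key: dict[str, str] = {}
    for participant, group in allocation.items():
        code = secrets.token_hex(4)  # random 8-character hex code
        while code in unblinding_key:  # regenerate on the (unlikely) event of a collision
            code = secrets.token_hex(4)
        kit_for_participant[participant] = code
        unblinding_key[code] = group
    return kit_for_participant, unblinding_key


allocation = {"P01": "drug", "P02": "placebo", "P03": "placebo", "P04": "drug"}
kits, key = blind_allocation(allocation)
print("Study team sees:", kits)  # codes only, no group names
# After data collection, the separately held key reveals each participant's group.
print({p: key[code] for p, code in kits.items()} == allocation)  # True
```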

3. Control Groups

Purpose: Provides a baseline for comparison, showing what would happen without the intervention.

Method: Include a group that does not receive the treatment but otherwise undergoes the same conditions.

4. Counterbalancing

Purpose: Controls for order effects in repeated measures designs by varying the order of treatments.

Method: Assign different sequences to participants so that each condition appears equally often in each position of the order.
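A common way to implement counterbalancing is a balanced Latin square (also called a Williams design). The sketch below, with hypothetical condition labels A to D and function names of our own choosing, generates the orders and cycles participants through them; it is one standard construction among several, not the only valid scheme.

```python
def balanced_latin_square(conditions: list[str]) -> list[list[str]]:
    """Return condition orders for counterbalancing.

    Each condition appears equally often in every position. With an even number
    of conditions, each condition also immediately follows every other condition
    exactly once; with an odd number, the mirrored orders are added (2n orders)
    so that this carryover balance still holds.
    """
    n = len(conditions)
    # First order follows the pattern 0, 1, n-1, 2, n-2, ...
    first_row = [(j + 1) // 2 if j % 2 else (n - j // 2) % n for j in range(n)]
    # Each later order shifts every index by one (mod n).
    orders = [[conditions[(k + i) % n] for k in first_row] for i in range(n)]
    if n % 2:
        orders += [list(reversed(order)) for order in orders]
    return orders


def assign_orders(participants: list[str], orders: list[list[str]]) -> dict[str, list[str]]:
    """Cycle through the orders; each is used equally often when the number of
    participants is a multiple of the number of orders."""
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}


for order in balanced_latin_square(["A", "B", "C", "D"]):
    print(" -> ".join(order))
```

With only two conditions the function returns A then B and B then A, which is the two-sequence crossover arrangement from the two-medication example earlier.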

5. Replication

Purpose: Ensures reliability by repeating the experiment or including multiple participants within groups.

Method: Increase sample size or repeat studies with different samples or in different settings.

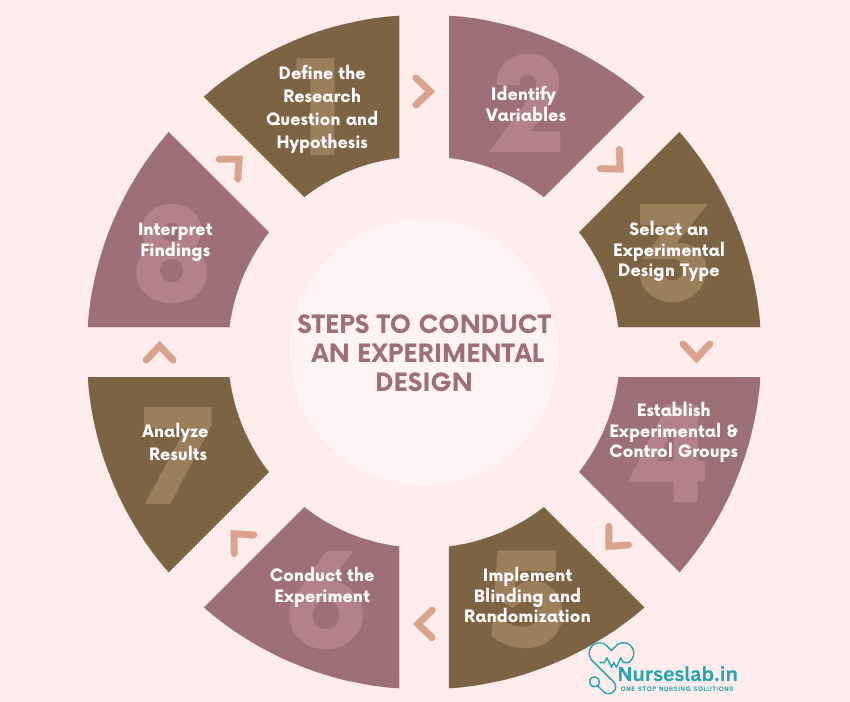

Steps to Conduct an Experimental Design

Developing an experimental design means planning the study so that the data collected are trustworthy and capable of revealing causal relationships.

1. Define the Research Question and Hypothesis

Clearly state what you intend to discover or test through the experiment. A strong, testable hypothesis guides the experiment’s design and variable selection.

2. Identify Variables

Independent Variable (IV): The factor manipulated by the researcher (e.g., amount of sleep).

Dependent Variable (DV): The outcome measured (e.g., reaction time).

Control Variables: Factors kept constant to prevent interference with results (e.g., time of day for testing).

3. Select an Experimental Design Type

Choose a design type that aligns with your research question, hypothesis, and available resources. For example, an RCT for a medical study or a factorial design for complex interactions.

4. Establish Experimental and Control Groups

Randomly assign participants to experimental or control groups. Ensure control groups are similar to experimental groups in all respects except for the treatment received.

5. Implement Blinding and Randomization

Randomize the assignment and, if possible, apply blinding to minimize potential bias.

6. Conduct the Experiment

Follow a consistent procedure for each group, collecting data systematically. Record observations and manage any unexpected events or variables that may arise.

7. Analyze Results

Use appropriate statistical methods to test for significant differences between groups, such as t-tests, ANOVA, or regression analysis.
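For two independent groups, a t-test is the simplest of these. In practice one would call a statistics library, for example SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`, which also returns a p-value; the standard-library sketch below computes only Welch's t statistic and its degrees of freedom to show what is being compared. Welch's variant is used here because it does not assume equal group variances; the data are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two
    independent groups; unlike Student's t-test it does not assume equal variances."""
    n_a, n_b = len(group_a), len(group_b)
    se2_a = variance(group_a) / n_a  # squared standard error of each group mean
    se2_b = variance(group_b) / n_b
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical outcome scores from a two-group experiment (not real data).
treatment = [12.1, 14.3, 13.8, 15.0, 12.9]
control = [10.2, 11.5, 10.9, 12.0, 11.1]

t, df = welch_t(treatment, control)
print(f"t = {t:.2f}, df = {df:.1f}")
```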

8. Interpret Findings

Determine whether the results support your hypothesis and analyze any trends, patterns, or unexpected findings. Discuss possible limitations and implications of your results.

Between-Subjects vs Within-Subjects Experimental Designs

The table below compares between-subjects and within-subjects designs:

| Between-Subjects | Within-Subjects |
|---|---|
| Each participant experiences only one condition | Each participant experiences all conditions |
| Typically includes a separate control group for comparison | Usually needs no separate control group, as participants serve as their own control |
| Requires a larger sample size for the same statistical power | Requires a smaller sample size for the same statistical power |
| Not affected by order effects | Susceptible to order effects (reduced by counterbalancing) |
| More affected by individual differences among participants | Less affected by individual differences among participants |
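To make the sample-size row of the table concrete, here is a rough calculation using the normal approximation for a two-sided test. The inputs are assumptions chosen for illustration, not recommendations: a standardized effect size of d = 0.5, α = 0.05, power of 0.80, and, for the within-subjects case, a correlation of 0.5 between each participant's scores in the two conditions. Exact t-based calculations give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_between(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-group between-subjects comparison."""
    z = NormalDist().inv_cdf
    return math.ceil(2 * ((z(1 - alpha / 2) + z(power)) / d) ** 2)


def n_within(d: float, rho: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants for a two-condition within-subjects comparison.

    rho is the assumed correlation between a participant's scores in the two
    conditions; a higher correlation shrinks the variance of the differences.
    """
    z = NormalDist().inv_cdf
    d_z = d / math.sqrt(2 * (1 - rho))  # standardized effect on the difference scores
    return math.ceil(((z(1 - alpha / 2) + z(power)) / d_z) ** 2)


effect = 0.5  # a "medium" effect size (Cohen's d), assumed for illustration
between = n_between(effect)
within = n_within(effect, rho=0.5)
print(f"Between-subjects: {between} per group, {2 * between} in total")
print(f"Within-subjects:  {within} participants in total")
```

Under these assumptions the between-subjects design needs about 63 participants per group (126 in total), while the within-subjects design needs about 32, and the gap widens as the correlation between conditions increases.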

Examples of Experimental Design in Research

- Medicine: Testing a new drug’s effectiveness through a randomized controlled trial, where one group receives the drug and another receives a placebo.

- Psychology: Studying the effect of sleep deprivation on memory using a within-subject design, where each participant is tested under each sleep condition.

- Education: Comparing teaching methods in a quasi-experimental design by measuring students’ performance before and after implementing a new curriculum.

- Marketing: Using a factorial design to examine the effects of advertisement type and frequency on consumer purchase behavior.

- Environmental Science: Testing the impact of a pollution reduction policy through a time series design, recording pollution levels before and after implementation.

Applications of Experimental Design

Experimental design is applied in many fields, including:

- Product Testing: Experimental design is used to evaluate the effectiveness of new products or interventions.

- Medical Research: It helps in testing the efficacy of treatments and interventions in controlled settings.

- Agricultural Studies: Experimental design is crucial in testing new farming techniques or crop varieties.

- Psychological Experiments: It is employed to study human behavior and cognitive processes.

- Quality Control: Experimental design aids in optimizing processes and improving product quality.



Connect with “Nurses Lab Editorial Team”
